Comprehensive cost tracking, 30-day forecasting, benchmarking, and ROI measurement for AI agents. Get real-time visibility into your LLM spending and optimize your AI operations.

Your AI agents are running 24/7, but you can't see where the money is going. Costs spike unexpectedly, you're paying for redundant API calls, and there's no way to forecast spending or measure ROI. Without proper cost tracking, you're flying blind—wasting budget on inefficient operations and missing opportunities to optimize.

LLM costs are scattered across multiple vendors and services. You can't see which agents, models, or operations are driving your spending. Unexpected bills arrive with no breakdown or explanation.

You can't predict costs for the next 30 days. Budget planning is guesswork. You're either over-provisioning and wasting money, or under-provisioning and hitting limits unexpectedly.

You can't measure the return on investment for your AI agents. Are they delivering value? Which agents are profitable? Without ROI metrics, you're investing in AI without knowing whether it's working.

Track every dollar spent across all your LLM vendors in real time. Get accurate 30-day cost forecasts based on historical data and usage patterns. Benchmark your costs against industry standards and measure ROI for every AI agent. Make data-driven decisions to optimize spending and maximize value.

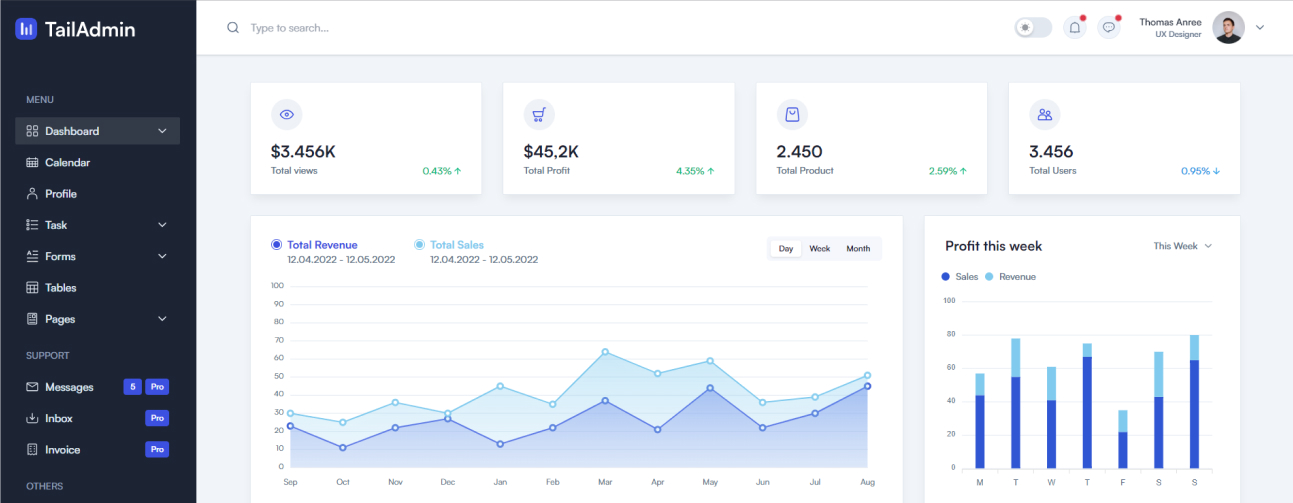

Monitor all LLM and API costs in a single unified dashboard. Track spending by agent, model, vendor, and operation. Get instant alerts when costs spike unexpectedly. Automatic OpenTelemetry instrumentation keeps tracking seamless.

Predict your LLM spending for the next 30 days with machine learning-powered forecasts. Plan budgets accurately, avoid surprises, and optimize resource allocation. Forecasts update in real time as usage patterns change.

Measure the return on investment for every AI agent. Benchmark your costs against industry standards. Identify which agents deliver the most value and optimize underperforming ones. Track cost vs. utilization to eliminate waste.

Multi-vendor cost tracking, automated forecasting, cost alerting, utilization analysis, and comprehensive ROI reporting for AI agents.

Consolidate all LLM and API costs from OpenAI, Anthropic, Google, and other vendors into a single unified view. Track spending by model, agent, project, and customer. Get detailed cost breakdowns with automatic categorization.

Get accurate 30-day cost forecasts powered by machine learning. Forecasts update in real time based on usage patterns, seasonal trends, and growth projections. Plan budgets with confidence and avoid unexpected bills.

Receive instant alerts when costs spike unexpectedly or exceed thresholds. Detect anomalies in spending patterns before they become problems. Set custom alerts by agent, model, or project to stay in control.
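To make the difference between a fixed threshold and an anomaly alert concrete, here is a minimal sketch. It is not the platform's implementation: it assumes daily spend for one agent is available as a list of dollar amounts, and the helper name `check_spend` and the z-score cutoff of 3.0 are hypothetical choices for illustration.

```python
from statistics import mean, stdev


def check_spend(history, today, threshold, z_cutoff=3.0):
    """Return alert reasons for today's spend (hypothetical helper).

    history: past daily costs in USD.
    today: today's cost in USD.
    threshold: fixed budget limit in USD.
    """
    alerts = []
    # Fixed threshold: fire whenever spend exceeds the configured limit.
    if today > threshold:
        alerts.append(f"over threshold: ${today:.2f} > ${threshold:.2f}")
    # Anomaly: flag spend far above the historical mean, measured in
    # standard deviations. Skipped when history is too short or flat.
    if len(history) >= 2:
        mu, sigma = mean(history), stdev(history)
        if sigma > 0:
            z = (today - mu) / sigma
            if z > z_cutoff:
                alerts.append(f"anomaly: z-score {z:.1f}")
    return alerts
```

For example, `check_spend([10, 12, 11, 9, 13], 40.0, threshold=50.0)` stays under the budget but is still flagged as an anomaly, because $40 is far above a history that averages $11 a day. Catching that kind of spike before it reaches a fixed limit is the point of anomaly detection.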

Measure the return on investment for every AI agent. Benchmark your costs against industry standards. Identify high-value agents and optimize underperformers. Track cost vs. utilization to eliminate waste and maximize efficiency.

Track costs across all vendors, forecast spending for the next 30 days, benchmark against industry standards, and measure ROI for every AI agent. Get real-time alerts, detailed analytics, and actionable insights to optimize your LLM spending and maximize value.


Monitor spending in real time, forecast costs for the next 30 days, benchmark performance, and measure ROI across all your AI operations, from customer support bots to code generation agents.

Monitor all LLM spending across OpenAI, Anthropic, Google, and other vendors in a single unified dashboard with automatic cost categorization.

Machine learning-powered forecasts predict your spending for the next 30 days, helping you plan budgets and avoid surprises.

Compare your costs against industry standards. Identify optimization opportunities and ensure you're getting the best value.

Measure return on investment for every AI agent. Track cost vs. utilization, identify high-value agents, and optimize underperformers.

Get real-time cost visibility, accurate 30-day forecasts, and comprehensive ROI analytics. Start optimizing your AI operations today.

See how teams are using cost tracking to optimize their LLM spending and maximize ROI.

Find answers to common questions about LLM cost tracking, forecasting, benchmarking, ROI measurement, and how to optimize your AI spending.

Our platform automatically tracks all LLM and API costs across multiple vendors (OpenAI, Anthropic, Google, etc.) using OpenTelemetry instrumentation. Costs are categorized by agent, model, project, and customer, giving you complete visibility into where every dollar is spent. Real-time dashboards show spending trends, anomalies, and detailed breakdowns.
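The core of per-agent and per-model cost tracking is simple arithmetic: price each call from its token counts, then sum by whatever dimension you care about. The sketch below illustrates that idea only and is not the platform's implementation. The `Call` record, the model names, and the per-million-token prices are hypothetical placeholders, not real vendor prices. In an OpenTelemetry pipeline, token counts would typically come from span attributes (the experimental GenAI semantic conventions define `gen_ai.usage.input_tokens` and `gen_ai.usage.output_tokens`); here they are passed in directly.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical prices in USD per 1M tokens: (input, output).
# Placeholders only; substitute each vendor's current price list.
PRICES = {
    "model-a": (3.00, 15.00),
    "model-b": (0.50, 1.50),
}


@dataclass
class Call:
    """One LLM call, tagged with the agent that made it (hypothetical record)."""
    agent: str
    model: str
    input_tokens: int
    output_tokens: int


def call_cost(call: Call) -> float:
    """Cost of one call in USD, computed from its token counts."""
    in_price, out_price = PRICES[call.model]
    return (call.input_tokens * in_price + call.output_tokens * out_price) / 1_000_000


def costs_by(calls, key: str) -> dict:
    """Sum call costs grouped by an attribute such as 'agent' or 'model'."""
    totals = defaultdict(float)
    for c in calls:
        totals[getattr(c, key)] += call_cost(c)
    return dict(totals)
```

With three calls, `Call("support-bot", "model-a", 2000, 500)`, `Call("support-bot", "model-b", 10000, 2000)`, and `Call("code-agent", "model-a", 5000, 3000)`, grouping by `"agent"` attributes about $0.0215 to the support bot and $0.06 to the code agent, while grouping the same calls by `"model"` shows where the spend lands per model.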

Our machine learning-powered forecasting models analyze historical usage patterns, seasonal trends, and growth projections to provide highly accurate 30-day cost predictions. Forecasts update in real time as usage patterns change, typically achieving 90%+ accuracy. You can set confidence intervals and receive alerts when actual spending deviates from forecasts.
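The answer above describes machine-learning forecasts; the sketch below deliberately swaps in a much simpler technique, an ordinary least-squares trend line over daily spend, to show how a 30-day projection and a forecast-deviation check fit together. It is an illustration under stated assumptions, not the platform's model. The function names and the 20% default tolerance are hypothetical.

```python
def linear_forecast(daily_costs, horizon=30):
    """Project daily costs `horizon` days ahead with a least-squares trend line.

    A simple baseline, not a machine-learning model: it ignores seasonality.
    """
    n = len(daily_costs)
    if n < 2:
        raise ValueError("need at least two days of history")
    xs = range(n)
    x_mean = (n - 1) / 2
    y_mean = sum(daily_costs) / n
    # Closed-form OLS slope and intercept for y = intercept + slope * x.
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, daily_costs))
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    # Clamp at zero: spend cannot be negative even if the trend points down.
    return [max(0.0, intercept + slope * (n + i)) for i in range(horizon)]


def deviates(actual, forecast, tolerance=0.2):
    """True if actual spend differs from the forecast by more than `tolerance` (a fraction)."""
    return abs(actual - forecast) > tolerance * forecast
```

For a history of `[10, 12, 14, 16]` dollars per day, the trend is +$2 per day, so the forecast starts at $18 for the next day and the 30-day total is `sum(linear_forecast(...))`. A production forecaster would also model weekly seasonality and report prediction intervals rather than a single line.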

We track costs per agent, per operation, and per customer, then correlate spending with business outcomes such as revenue generated, tasks completed, or customer satisfaction scores. Our ROI dashboard shows which agents deliver the most value, reports cost-per-outcome metrics, and identifies optimization opportunities. You can also benchmark your performance against industry standards.
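One common way to express the metrics described above, assuming each agent's attributed value can be stated in dollars, is ROI as (value - cost) / cost alongside cost per outcome. The sketch below is illustrative only: the `AgentPeriod` record and the helper names are hypothetical, and attributing value to an agent, which is the hard part in practice, is not shown.

```python
from dataclasses import dataclass


@dataclass
class AgentPeriod:
    """Hypothetical per-agent totals for one reporting period."""
    name: str
    cost: float    # USD spent on LLM and API calls
    value: float   # USD of attributed value (revenue, labor saved, ...)
    outcomes: int  # tasks completed, tickets resolved, etc.


def cost_per_outcome(a: AgentPeriod) -> float:
    """USD spent per completed outcome; infinite if nothing was completed."""
    return a.cost / a.outcomes if a.outcomes else float("inf")


def roi(a: AgentPeriod) -> float:
    """ROI as a fraction: (value - cost) / cost. 4.0 means a 400% return."""
    if a.cost == 0:
        return float("inf") if a.value > 0 else 0.0
    return (a.value - a.cost) / a.cost


def rank_by_roi(agents):
    """Agents sorted from highest to lowest ROI."""
    return sorted(agents, key=roi, reverse=True)
```

For example, a support bot that cost $200 and is credited with $1,000 of value across 400 resolved tickets has an ROI of 4.0 and a cost per outcome of $0.50, while a code agent that cost $500 for $750 of value across 50 tasks has an ROI of 0.5 and a cost per outcome of $10.00. Ranking both makes the underperformer obvious.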

We support all major LLM vendors, including OpenAI (GPT-4, GPT-3.5, etc.), Anthropic (Claude), Google (Gemini, PaLM), Cohere, and more. Our platform automatically tracks costs across all vendors and models, giving you a unified view of spending regardless of which APIs you use. New vendors and models are added automatically as they become available.

401 Broadway, 24th Floor, New York, NY

getcortefy@gmail.com